Dagstuhl Egraphs

HOLY FUCK.

That was pretty neat.

I was lucky enough to be invited to a workshop for egraphs and compilers https://www.dagstuhl.de/seminars/seminar-calendar/seminar-details/26022 . My thanks to George, Chris, Michel, and Max for organizing! Total adult nerd summer camp. Never done anything quite like that. You’d wake up groggy each morning and then accidentally find yourself raving over muesli and yogurt on your opinions about “context”. I had almost an alarming energy considering I was was sleeping so terribly. I think I could talk shop forever and basically have no one local that I wouldn’t be bad subjecting to hours of egraph minutiae and speculation.

It’s funny, I do recall the week before leaving being like “oh jesus why did I agree to haul my ass to the german countryside when I’ve got a cat right here” but it was definitely worth it.

I also gave myself the weekend after for a little sightseeing. I am not in Europe often enough to not get a little kick out of seeing all the signs and the cut of pants looking weird. I did one day in Frankfurt, a day trip to Heidelberg and a day trip to Mainz. Heidelberg was sweet. Mainz had a tough time comparing (also it was a nasty rainy day).

I came away with maybe a couple budding collaborations, which is something I’ve wanted to try to foster.

- Egraphs benchmarks would be nice. Even just terms/programs to be optimized https://github.com/philzook58/egg-bench

- I’m still stoked about ground knuth bendix completion as a framework. Continue tinkering on that. Also implement regular unfailing completion with variables. I think AC ground completion ought to be an AC egraph.

- Maybe an ebook collecting up the basics of egraphs, implementations, and the zoo of variants. It’s hard to pick a tool. Jupyterbook, mdbook, mkdocs, and quarto, or just doing something bespoke. I’m leaning towards quarto. A

microeggin rust specialized to symbol lang I think could be nice. - Still need to discuss with Rudi to understand slotted better.

- I’m very pleased that co-egraphs https://www.philipzucker.com/coegraph/ may be going somewhere. I thought this was one of the few kind of original-ish things I’ve done. Sam has a very different perspective on what such a thing might be

- The refinement of boolean expressions with don’t care is a really good problem

SMT tutorial

I was assigned to give a basics of SMT. Maybe I took my task too seriously, but it was actually quite good to review and learn about theory combination just for me. I didn’t end up even presenting any of the interesting stuff.

Practice run of introduction to SMT youtube video

https://colab.research.google.com/github/philzook58/philzook58.github.io/blob/master/pynb/2026_smt_talk_dahgstuhl_egraphs.ipynb Inline this notebook. Why not.

Egraphs Modulo Theories

I had a little breakout session talking about egraphs modulo theories.

It’s all about the interface

Extract, canon, reinsert

class Theory(Protocol):

def canon(t : Term) -> Term:...

Nelson Oppen-ish Propagators

class Eq(NamedTuple):

negate : bool

lhs : EId

rhs: EId

class Theory(Protocol):

def assert_eq(self, a : EId, b : EId): ... # union

def assert_diseq(self, a : EId, b : EId): ...

def propagate(self) -> list[Eq]

Generalize Union Find

You can swap ouught the union find on a sort by sort basis to get some interesting theory smarts.

class Theory(Protocol):

type EId # theory specific associated type of structured Eid

def makeset(self) -> EId: ...

def union(self, a : Eid, b : Eid): ...

def canon(self, a : Eid) -> Eid: ... # find

Gaussian elimination, group union find, grobner basis, multiset completion fit this form. Slotted needs a bit more

Refinement egraph via inequality union find.

class Theory(Protocol):

def makeset(self) -> EId: ...

def find(self, a : EId) -> EId: ... # just normalized with respect to equality, not inequality

def union(self, a : EId) -> EId: ...

def assert_le(self, a : EId, b : EId): ...

def is_le(self, a : Eid, b : EId) -> bool: ...

def iter_up(self, a : EId) -> list[EId]: ...

def iter_down(self, b : EId) -> list[EId]: ...

Generalized Ground Knuth Bendix

The notion of what the interface here is less crisp for me. But there is a theory specific notion of term, context and overlapping. The theories somehow embed in each other as opaque constants.

I don’t think I persay need a well specified compositional interface, since I’m fine with making a big mudball EGraph object

class Theory(Protocol):

type Term

type Ctx

def plug(self, t : Term, ctx : Ctx) -> Term: ...

def overlaps(self, t : Term, s : Term) -> list[Ctx]: ...

# find the locations where s is a subterm of t for critical pairs

# but also sufficient for rewriting

def compare(self, t : Term, s : Term) -> Ordering: ... # well founded Term ordering

def

Ematching modulo theories and SMT

A finite egraph can encode the truth table of And, Or, Not. Ematching over this is solving a SAT or allSAT problem.

- Top down ematching is tableau

- Bottom up is truth table

- Relational (using tries) ~ BDD

- What is CDCL(T)? Guess and propagate

Max is clearly more lattice brained than I am. Also discussed the idea of instead of outlawing each model to iteratively allSAT an SMT solver, instead have some ordering and ask for all solutions above some cutoff? But the ordering should be algorithm aware.

Bits and Bobbles

Refinement Egraphs

Generalized Rewriting

Slotted Egraphs

Centralizing theories in MCSAT https://hal.science/hal-01935591/document

https://ora.ox.ac.uk/objects/uuid:68f76f3a-485b-4c98-8d02-5e8d6b844b4e/files/mc4dc4637d194fcfc38b36b9be6cead80 abstracting satisfaction

Embedding egraphs into graph rewriting. eid nodes but also “share”/dup nodes. Interleave optimal reduction stuff and egraph stuff

Slotted egraphs are python. Keyword and optional arguments are perfectly reasonable as simple features. keyword arguments are not ordered. It is possible to make a variation of term rewriting where terms have these features. An enode is f(e17(m=i,n=j), e4(q=j), k=i, l=j) e42(m=i, n=j) is a renamed id. e43(a=i, b=i, c=j) = e3(x=j,y=i) is an equation handled by slotted union find.

Connection of Normalization by Evaluation to egraphs? Extract term (reflect), rewrite, and reinsert (eval) is evocative, but really it is the opposite direction since egraph is more model-like and term is syntax.

Build simplest possible version of SSA is functional programming style egraph. Blocks correspond to a function symbol with block args. Eqsat rules give body of block. Extraction proceeds from entrypoint onward. Any block used must also have a definition extracted. Or do a global extraction somehow

Atomic type theory using slots/nominal as extra abstract elements. The meaning of type Bool in context is set with extra elements [[a : Bool, b : Bool |- Bool]] = {a, b, True, False}. Model distinction between definitional and propositional equality.

Stratified EPR, effectively ground knuth bendix, and slotted egraphs. If there are variables, but they are guarded by a var(X) constructor that never has anything except variables in it, there cannot be any interesting critical pairs in knuth bendix, and the system is effectively ground. It will complete successefully. This gives you a something a a little bit like alpha invariant names. Likewise EPR let’s you have quantified equations that will be decidable by z3’s MBQI (model based quantifer instantation). This terminates for the same reason datalog does, there are only a finite number of terms buildable from the constants avaiable in the problem that one can instantiate a single outer quantifier with. Stratified EPR allows function symbols just so long as the sort discipline again still restricts the building to a finite number of terms. This is like a grammar that doesn’t have a recursive loop in it. It will only describe a finite number of terms/parses. I really went in half cocked and jetlagged trying to explain this at dinner on Sunday. It’s a complicated thing to talk about anyway.

Herding Cats https://dl.acm.org/doi/10.1145/2627752

egraph-zoo https://github.com/sdiehl/typechecker-zoo/tree/main

https://github.com/eytans/easter-egg

Tutorials

- egraphs - max

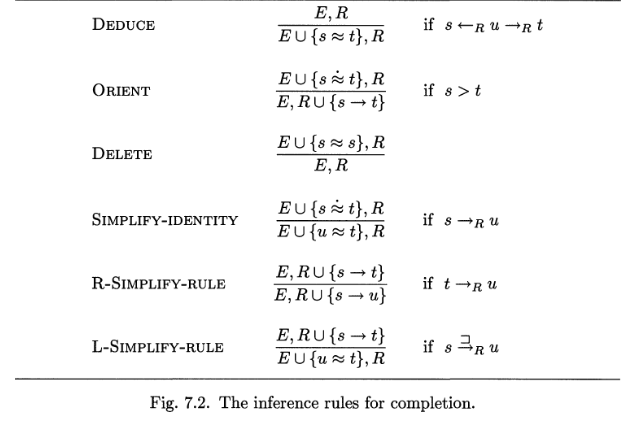

- Term rewriting and Knuth Bendix and strategies. Sebastian and Michel

- SMT - me :)

- Theorem proving

- Compilers

SSA is real. They actually mean the ordering and the names despite me thinking of it as sugar over SSI makes new names for each branch of an if so that you have a unique name for a variable as it appears in a context. Analysis info may change on that varianle there.

- Hardware George Sam. Industry verification tools need to be able to sign off on what you’ve done.”Choice networks” is a hardware compiler topic that is a realtive of egraphs, like VSA version space algebra.

-

Oliver egglog intro. I really do not understand some of the keyword changes. They seem so completely orthogonal to my thinking of the meaning of the egraph

AnyDSL and mimIR Bombe Optitrust Zach Course

Ground KB Hack Session

I suggested a hack session to implement ground knuth bendix in rust. I think this was a good thing to start. The discussion I think went largely over to the idea of preprocessing or co-processing rules using knuth bendix.

div-rem is a notion of context for polynomials

What are analyses in this framework? Maybe a nice assymmettric ground completion. Or just something bolted on? The old idea of internalized / abstract / undecidable groups / lattices on the union find.

Approximation

George. Ultrametrics have better transitivity properties?

t2 =eps t1 non transitive. Just a grpah

asymptotic equality + gluing? t1 = t2 + O(eps^3)

%%file /tmp/approx.egg

(datatype Math

(Add Math Math)

(X)

(Y)

(Lit i64)

)

(function approxeq (Math Math) i64 :merge (min old new))

; reflexivity

(rule ((= e (Add a b))) ((set (approxeq e e) 0)))

(set (approxeq (X) (X)) 0)

(set (approxeq (Y) (Y)) 0)

; symmetry

; This doesn't work?

;(rewrite (approxeq a b) (approxeq b a))

(rule ((= e (approxeq a b))) ((set (approxeq b a) e)))

; "transitivity", shortest path

(rule

((= (approxeq a b) d1)

(= (approxeq b c) d2))

((set (approxeq a c) (+ d1 d2)))

)

; "congruence" for Add. Lifshitz = 1. There are more variations with different demand

(rule

((= e (Add a b))

(= d1 (approxeq a a1))

(= d2 (approxeq b b1)))

((set (approxeq (Add a1 b) (Add a b)) (+ d1 d2)))

)

(set (approxeq (X) (Y)) 1)

(set (approxeq (Y) (Z)) 1)

(let t (Add (X) (Y)))

(run 10)

(print-function approxeq)

Slotted

rename

extra congurence rule

egraph extended by fee variables

a = b bijection sigma : Var- > Var

--------------

a sigma = b sigma

f(e1(k,j), ) = e17(k)

f(e(i,j), e(k)) = var(i)

All sigma equalities applied are implied

x - x = 0

y - y = 0

semantics, give me a name and I give you a set of all terms filled in with

Binders are actually from the same feature that makes x - x = 0 = y -y

f(e(i,j)) = e(j)

f(i,j) =

kleene algerba

Optimism

need names https://arxiv.org/abs/2511.20782

x = 1

while 1:

x = x + 1 * 5

middle fixed point. Do so by not starting at bottom but at some intermediate point? There is an arxiv paper.

The boldness to do something unsound that you can skirt doing extraction is not in me.

Perhaps Answer Set Programming and the idea of a stable model could be useful?

KB

pareto frontier smart consutrctor using branch and bround preporcessing like knuth bendix two orderings. The ordering for KB and the optimization ordering. Perhaps separate perhaps not

- a more hackable full knuth bendix.

- Nasty user orderings

- Partial knuth bendix solutiison

- Unfailing

Ground KBO on f with different arity might have a problem. Maybe “make part of name” the arity. f/3 f/2 f/1

You don’t need deduce in the ground case?

E,R',G,T

Separate R into R = R' U G where G are the ground rewrite rules. They are the egraph combined with T is a termbank used for relevancy

EqSat as an unfair unfailing KB strategy. Use “Decide” overlaps between T,R’,G to generate new equations. Huet is an example of a completion strategy (see traat)

Rudi had a good point that keeping a “normalizing” egraph makes Theory matching (module R’) necessary. For example consider dealing with associativty in R’ by fully right associating. Makes pattern matching harder

Some things support a notion of normalizing an equation to isolate a largest term. The group union find from the KB perspective does this

{x^2 + 3 = y - 2} U E, R

--------------------------- norm eq

{x^2 = y -5} U E, R

Defining fresh constants is a conservative extension hopefully. Where e goes in the term ordering is a problem though. And changing your ordering mid stream is incomplete. So the status of the correctness of this rule is qeusitonable. It seems most correct if e has a term ordering adjacent to t https://kluedo.ub.rptu.de/frontdoor/deliver/index/docId/358/file/seki_34.pdf “About Changing the Ordering during Knuth Bendix Completion” Sattler-Klein

E, R e fresh

--------------------- define

{e = t} U E, R

https://titan.dcs.bbk.ac.uk/~carsten/satsmtar2024/satsmtar2024.pdf carsten. Quite a deck

https://www.philipzucker.com/egraph-ground-rewrite/

Egg-bench session

- Breadth First Term search as baseline?

- Stochastic equational search?

- Simple bad egraph

https://github.com/philzook58/egg-bench https://www.philipzucker.com/rewrite_rules/ https://www.semgus.org/ https://sygus.org/comp/2019/

Co-egraphs

https://www.philipzucker.com/coegraph/

https://joerg.endrullis.de/publications/infinitary%20rewriting/

ground infinitary knuth bendix?

Rational Tree Unification with CF

https://cfbolz.de/posts/dfa-minimization-using-rational-trees-in-prolog/ https://en.wikipedia.org/wiki/Cycle_detection https://en.wikipedia.org/wiki/Cycle_detection#Floyd's_tortoise_and_hare https://en.wikipedia.org/wiki/Pollard%27s_rho_algorithm

CF has a python file now. Where is it? here https://gist.github.com/cfbolz/ffd49e7a654c5ba224f73c1fc39673d0

import pytest

from dataclasses import dataclass, field

class Expr:

pass

@dataclass

class Var(Expr):

name : str

bound : Expr | None = None

def __eq__(self, other):

return isinstance(other, Var) and self.name == other.name

def __hash__(self):

return hash(self.name)

@dataclass

class Term(Expr):

name : str

children : list[Expr] = field(default_factory=list)

def __eq__(self, other):

return isinstance(other, Term) and self.name == other.name and self.children == other.children

def __hash__(self):

return hash((self.name, tuple(self.children)))

class UnificationError(Exception):

pass

def unify(e1, e2):

if isinstance(e2, Var):

e1, e2 = e2, e1

if e1 is e2:

return

match (e1, e2):

case (Term(name1, children1), Term(name2, children2)):

if name1 != name2:

raise UnificationError

if len(children1) != len(children2):

raise UnificationError

for t1, t2 in zip(children1, children2):

unify(t1, t2)

case (Var(name1, None), _):

e1.bound = e2

case (Var(name, bound), _):

e1.bound = e2 # this is all that's needed for supporting rational trees

unify(bound, e2)

case _:

raise UnificationError

def test_simple():

v1 = Var("X")

t1 = Term("a")

unify(v1, t1)

assert v1.bound == t1

with pytest.raises(UnificationError):

unify(v1, Term("b"))

def test_rational_trees():

X = Var("X")

t1 = Term("a", [X])

unify(X, t1)

unify(t1, t1) # loop

Y = Var("Y")

t2 = Term("a", [Y])

unify(Y, t2)

unify(t1, t2) # loop

def linear_unify(e1, e2, subst=None):

if subst is None:

subst = {}

subst = subst.copy() # never mutate existing substs

todo = [(e1, e2)]

while todo:

e1, e2 = todo.pop()

if isinstance(e2, Var):

e1, e2 = e2, e1

match (e1, e2):

case (Term(name1, children1), Term(name2, children2)):

if name1 != name2:

raise UnificationError

if len(children1) != len(children2):

raise UnificationError

for t1, t2 in zip(children1, children2):

todo.append((t1, t2))

case (Var(name1, _), Var(name2, _)) if name1 == name2:

pass

case (Var(name1, _), _):

bound = subst.get(name1)

subst[name1] = e2

if bound is not None:

todo.append((bound, e2))

case _:

raise UnificationError

return subst

def test_rational_trees_linear():

X = Var("X")

t1 = Term("a", [X])

subst = linear_unify(X, t1)

assert subst == {"X": t1}

linear_unify(t1, t1, subst) # loop

Y = Var("Y")

t2 = Term("a", [Y])

subst2 = linear_unify(Y, t2, subst)

assert subst2 == {"X": t1, "Y": t2}

subst3 = linear_unify(t1, t2, subst2) # loop

assert subst3 == {"X": t2, "Y": X}

def test_rational_trees_linear2():

X = Var("X")

t1 = Term("a", [X])

subst = linear_unify(X, t1)

assert subst == {"X": t1}

linear_unify(t1, t1, subst) # loop

Y = Var("Y")

t2 = Term("a", [Term("a", [Term("a", [Y])])])

subst2 = linear_unify(Y, t2, subst)

assert subst2 == {"X": t1, "Y": t2}

subst3 = linear_unify(t2, t1, subst2) # loop

number = -1

def new_str():

global number

number += 1

return f"state{number}"

@dataclass

class DFA:

final : bool

on_a : "DFA | None"

on_b : "DFA | None"

name : str = field(default_factory=new_str)

def is_same(a1, a2):

todo = [(a1, a2)]

while todo:

a1, a2 = todo.pop()

if a1 is a2 is None:

continue

if a1 is None and a2 is not None:

raise UnificationError

if a1 is not None and a2 is None:

raise UnificationError

if a1.name == a2.name:

continue

if a1.final != a2.final:

raise UnificationError

a1_on_a = a1.on_a

a1.on_a = a2.on_a

todo.append((a1_on_a, a2.on_a))

a1_on_b = a1.on_b

a1.on_b = a2.on_b

todo.append((a1_on_b, a2.on_b))

def test_dfa_is_same_linear():

dfa1 = DFA(False, DFA(True, None, None), None)

dfa2 = DFA(False, DFA(True, None, None), None)

is_same(dfa1, dfa2)

def test_dfa_loop():

dfa1 = DFA(True, None, None)

dfa1.on_a = dfa1

# a*

is_same(dfa1, dfa1)

dfa2 = DFA(True, None, None)

dfa2.on_a = dfa2

is_same(dfa1, dfa2)

dfa3 = DFA(True, DFA(True, None, None), None)

dfa3.on_a.on_a = dfa3

is_same(dfa1, dfa3)

def unify_with_tabling(e1, e2, table=None):

if table is None:

table = frozenset()

if isinstance(e2, Var):

e1, e2 = e2, e1

if (e1, e2) in table:

return

table = table | {(e1, e2)}

match (e1, e2):

case (Term(name1, children1), Term(name2, children2)):

if name1 != name2:

raise UnificationError

if len(children1) != len(children2):

raise UnificationError

for t1, t2 in zip(children1, children2):

unify_with_tabling(t1, t2, table)

case (Var(name1, None), _):

e1.bound = e2

case (Var(name, bound), _):

unify_with_tabling(bound, e2, table)

case _:

raise UnificationError

def test_unify_with_tabling():

X = Var("X")

t1 = Term("a", [X])

unify_with_tabling(X, t1)

unify_with_tabling(t1, t1) # loop

Y = Var("Y")

t2 = Term("a", [Y])

unify_with_tabling(Y, t2)

unify_with_tabling(t1, t2) # loop

def linear_unify_with_tabling(e1, e2, subst=None, table=None):

if subst is None:

subst = {}

if table is None:

table = frozenset()

subst = subst.copy() # never mutate existing substs

todo = [(e1, e2, table)]

while todo:

e1, e2, table = todo.pop()

if (e1, e2) in table:

continue

if isinstance(e2, Var):

e1, e2 = e2, e1

table = table | {(e1, e2)}

match (e1, e2):

case (Term(name1, children1), Term(name2, children2)):

if name1 != name2:

raise UnificationError

if len(children1) != len(children2):

raise UnificationError

for t1, t2 in zip(children1, children2):

todo.append((t1, t2, table))

case (Var(name1, _), _):

bound = subst.get(name1)

subst[name1] = e2

if bound is not None:

todo.append((bound, e2, table))

case _:

raise UnificationError

return subst

def test_rational_trees_linear_with_tabling():

X = Var("X")

t1 = Term("a", [X])

subst = linear_unify_with_tabling(X, t1)

assert subst == {"X": t1}

linear_unify(t1, t1, subst) # loop

Y = Var("Y")

t2 = Term("a", [Y])

subst2 = linear_unify_with_tabling(Y, t2, subst)

assert subst2 == {"X": t1, "Y": t2}

subst3 = linear_unify_with_tabling(t1, t2, subst2) # loop

assert subst3 == {"X": t2, "Y": X}

def test_rational_trees_linear2_with_tabling():

X = Var("X")

t1 = Term("a", [X])

subst = linear_unify(X, t1)

assert subst == {"X": t1}

linear_unify(t1, t1, subst) # loop

Y = Var("Y")

t2 = Term("a", [Term("a", [Term("a", [Y])])])

subst2 = linear_unify_with_tabling(Y, t2, subst)

assert subst2 == {"X": t1, "Y": t2}

subst3 = linear_unify_with_tabling(t2, t1, subst2) # loop

# doesn't work:

# def unify_with_tabling_occurs_check(e1, e2, table=None):

# if table is None:

# table = frozenset()

# if isinstance(e2, Var):

# e1, e2 = e2, e1

# if (e1, e2) in table:

# raise UnificationError

# table = table | {(e1, e2)}

# match (e1, e2):

# case (Term(name1, children1), Term(name2, children2)):

# if name1 != name2:

# raise UnificationError

# if len(children1) != len(children2):

# raise UnificationError

# for t1, t2 in zip(children1, children2):

# unify_with_tabling_occurs_check(t1, t2, table)

# case (Var(name1, bound), _):

# if bound is None:

# e1.bound = e2

# unify_with_tabling_occurs_check(e1.bound, e2, table)

# case _:

# raise UnificationError

#

#

# def test_unify_with_tabling_occurs_check():

# X = Var("X")

# t1 = Term("a", [X])

# import pdb;pdb.set_trace()

# unify_with_tabling_occurs_check(X, t1)

# unify_with_tabling_occurs_check(t1, t1) # loop

#

# Y = Var("Y")

# t2 = Term("a", [Y])

# unify_with_tabling_occurs_check(Y, t2)

# unify_with_tabling_occurs_check(t1, t2) # loop

There is something like

- Recursive version

- Loop version

- explicit tabling set version. Keep set

I feel like there is a dumber more clear version in here somewere. Least and greatest fixed point in what ordering? Vicious Circles book.

Rational tree interpreters https://arxiv.org/abs/cs/0403028 An Application of Rational Trees in a Logic Programming Interpreter for a Procedural Language

Tabling rational terms coinduction https://arxiv.org/abs/1405.2794 https://www.swi-prolog.org/pldoc/man?section=cyclic

Told CF about CoPy. I think this is really neat https://nepls.org/Events/35/abstracts.html#08c03fd7

https://github.com/memoryleak47/pydull Rudi’s functional interpreter

A toy interpreter of two instructions

import sys

from rpython.rlib.jit import JitDriver

INC = "INC"

JMP = "JMP"

jitdriver = JitDriver(greens=['pc', 'prog'], reds=["acc"])

def run_loop(prog):

acc = 0

pc = 0

while pc < len(prog):

jitdriver.jit_merge_point(pc=pc,

acc=acc,

prog=prog)

if acc % 1000000 == 0:

print acc

instr = prog[pc]

if instr == INC:

acc += 1

pc += 1

elif instr == JMP:

pc = 0

jitdriver.can_enter_jit(pc=pc, acc=acc, prog=prog)

else:

raise Exception("unknown instruction", instr)

def target(*args):

return main

def main(args):

# actually used by rpython

if len(args) != 2:

print("usage: %s <progfile>" % args[0])

return 1

with open(args[1]) as f:

prog = f.read().splitlines()

run_loop(prog)

return 0

if __name__ == "__main__":

main(sys.argv)

! pypy2 /home/philip/Documents/python/pypy/rpython/bin/rpython -Ojit /tmp/example.py

RPython Jam Session

https://github.com/memoryleak47/pydull/blob/main/pydull/interpreter.py Rudi has a toy language. https://github.com/alex/rply https://hg.sr.ht/~cfbolz/Pyrolog

Would it make sense to write a solver in rpython? Accelerate an egg/z3 style ematching virtual machine? https://leodemoura.github.io/files/ematching.pdf https://github.com/egraphs-good/egg/blob/main/src/machine.rs They are similar to prolog vritual machines. The demodulator in a superposition solver? A term rewriting engine like dedukti https://drops.dagstuhl.de/storage/00lipics/lipics-vol167-fscd2020/LIPIcs.FSCD.2020.35/LIPIcs.FSCD.2020.35.pdf ?

C interpreter in rpython for mem safety / undef detection. Like cerberus https://github.com/rems-project/cerberus

Pcode interpreter. Hard to make it correct enough in regards to manipulating program memory even for user space. Very nice though if you have easily extensible binary JIT for attahching info to.

Jitting dependent type theory - Compete with Kovacs smalltt https://github.com/AndrasKovacs/smalltt , Bowman https://types.pl/@wilbowma/115653241489432907. Kovacs does some very cool stuff I don’t understand in regards to making high perf intepreters and https://github.com/AndrasKovacs

JIT combinators from MicroHS? https://github.com/augustss/MicroHs/blob/aea0445baddd01d2c560b18f3684009abd745745/src/runtime/eval.c#L4696

SMTLIB interpreter. Verify programs in knuckledragger, output the smtlib to run. Enables fast proof by reflection in a somewhat satisfying way. Not so sure this could be a verified web server or anything. Need some methodology to attach to IO.

JIT a minikanren. Minikanren is perceived as somewhat slow (https://github.com/michaelballantyne/faster-minikanren not withstanding) compared to prolog. https://dl.acm.org/doi/10.1145/3729314

Lattice Analysis as Assymettric Completion?

contextual Egraphs

max(x,y) - min(x,y) =? abs(x - y)

color enodes and color union find edges

canonizing union find I think that the differential union find gives false negatives without rebuilding Or you need searc h

cranelift

Isle clif -> aarch64/x86

(rule (simplify insn) insn) (rule (lower ir) insn)

The jit already has an egraph - CF

eggs-bendix

Shachar

freshmans dream https://en.wikipedia.org/wiki/Freshman%27s_dream

(X + y)^2 = x^2 + y^2

x + x = 0

(x + y)^k = x^k + y^k

doesn’t scale good with k

limit rukes scheduling

map F [] = []

map F (x:xs) = F x : map F xs

derived rule

map F [x] = [F x]

rev [] = []

rev (x:xs) = rev xs ++ [x]

conditional rule not p x |- filter p x :: xs = filter p xs

olog - if rules match the same, fire them once

deobfuscation of MBA

Eun-Sun Cho https://drive.google.com/file/d/1iA0dNwBQydk3se5scSliEuW508BzamfY/view slides opaque predictaes data obsucation vmprotect code vritualizer

boosting smt solver performance on MBA pldi 21

apply rule synthesis, generate semi random exprs (ACM ccs 23 ccs 25 deobfuscation of linearMBA is solved (WISA 07)

machine learning ACL findings ‘25 egraphs ACM ccs24 poster

optimization vs deobfuscation: Shofter code can be less readable. Stoke makes unreadable stuff

Scrambler - mba based deobfuscator EMBA - ACM CCS 24

MBA based on proabbailities

GAMBA ‘S&P 23 loki benchmark UsSENIX

ProMBA ACM CCS ‘23

AST depth as a metric - similar to size. Huh.

Qsynth loki MBA solver non poly MBA solver poly

MLIR egraph

Modify existing pdl matcher

scoped hashcons

scoped hash cons hash cons id F scope

Scoped egraph scoped hash cons

class Node(NamedTuple): scope : int # scopes maybe can just be compared id : int hash : int args : tuple

class ScopedHashCons(): memos : list[

Chains of recurrences. SCEV. What about egraphing that? https://bohr.wlu.ca/ezima/papers/ISSAC94_p242-bachmann.pdf

cranelift 2

block params give you SSI because the arugment to the block ast definition is distinct from argument at call site.

The extraction problem is part of the hard part, Block definitions are rules. initial egraph with block1(a,b,c) block2(e,f) block3(x)

“seeing through block params”. Hmmmmmmm. backwards expansion of where it might be called from. Hmmmmm. Well, the previous block is the thing that does that.

Extract entry point entry(x,y,z) with estimate of opaque block costs (?) Recusrively extract called blocks in result until complete. call(block1, a, b, c)

Can I write loop rules as something like: call(block1, args1) call(block2, args2) call(block3, args3) =>

By making block a LFHOL parameter

We want to extract equation system, like in quantifier elimination work.

scoped union find to do lifting upward? use slotted style for calls?

leveled hash cons Jules merckx

aegraphs

extractors = unapply in isle match on lhs, return bindins constructors invoke on rhs return bvalue

match_args = [“unapply”]

multiextractoes and multconstructors

class EClass(): __match__args = [“unapply”] @property def unapply(self): return E(self.nodes

class E(): nodes: list = field(default_factory=list)

class Multi(): nodes :

match e: case: E(nodes): case App(): case AC(nodes): case Multi():

scoped elabration “scoped hash map” dom tree oh shit. So GVN probably has a levelled union find

online total order pick a fraction for each. Always use gaps. Don’t need general fractions. Probably some way of A bigint considered as reverse() = 0.bbbbbb 1 = 1/2 10 = 1/4 11 = 3/4 100 = 1/8

On overflow rebalance.

vec<Option

https://microsoft.github.io/z3guide/docs/theories/Special%20Relations/ tree orders. Hmm. Tree order can be tracked as special?

Piecewise linear

Have I ever done partial order?

refine = PartialOrder() refine(x, y)

Should store diseq and eq in z3 egraph. Known diseq shouldn’t be rechecked either. Does this subsume fingerprint vectors?

You can prototype refinement egraph using z3 no problem.

What does z3 do for total orders?

rebuild to make everything more evenly spaced

hash Modulo theories

- Homomorphism

- fingerprint invariants

Orderings: Homomorphism into ordered domain that’s nicer is a way to prune.

“Random” placement? [unknown, unknoen , 1/2]

x <= y compare the two vectors. Uhhh. If inconsistent we need to consider all things in between the two Hmm.No this isn’t that good.

do an epsilon egraph. What about abstract epislon analgous to abstract group or lattice.

scoped egraph for epislon power?

Numerical

scaling up roundoff nalsyis of functional data structrue programs - Eva SAS 23 dasiy fluctuat interval analysis

machine epsilon + subnormal model.

abstract domain for arrays of float groups of cells with same analysis results but can widen to group more cells reductions can

This would be a great thing to try to proce in knuckeldragger

Masters thesus simon borklund numercial aanalysis and optimizao of functional programs Exploring accuracy and pefroanmcne tradeoss inf functional array program - Knorring

Error free transformation

Go over expression, pattern match these and add them. The analog of the ematching process in that it records new bools, But it’s just boolinstan

class Pattern

def boolinstan(e, vs, pat, body, pf)) : # find body def boolmatch

def all_match(e, vs, pat): # but we might be in a weird context? traversing quantfiers. Hmm. # yeaaa. Maybe just can’t support that? # I mean, we should just let z3 do it ? yes. Duh

Wait, with help, z3 should slap this.

smt.ForAll([x,y], smt.abs(approx_add(x,y) - ( x + y)) <= eps * abs(x + y), pattern=(approx_add(x,y))

approximate egraph. epsilon edges. But these are basically supported by z3 <= right?

| This can be used to have “definitinal” facts like the | float_add() - add | <= elps |

export C from knuckledragger

rise

nick higham - flloating point book Best method to sum is minimizaing absolute value of partial sum - proof?

EFT error free transformation

PR print advanced stats min max median eclass size Average nodes per eclass

redundant number system_

smtcoq uses ematching patterns

Dumbest

egg zoo